Arch Linux Install Docker

Docker is a utility to pack, ship and run any application as a lightweight container.

Installation

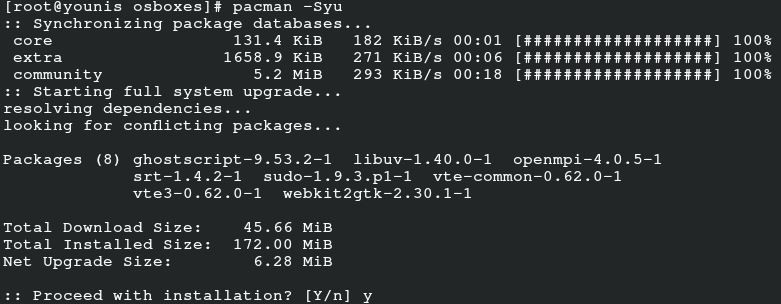

The Arch Linux installation image can be burned to a CD, mounted as an ISO file, or written directly to a USB stick using a utility like dd. It is intended for new installations only; an existing Arch Linux system can always be updated with pacman -Syu.

Once you have the yay AUR helper installed, you can upgrade all the packages on your system with the following command (run yay as a regular user, not with sudo):

$ yay -Syu

To include development packages during the upgrade, run:

$ yay -Syu --devel --timeupdate

As with any other AUR helper, you can install packages with yay -S followed by the package name.

Prerequisites: an Arch Linux instance with SSH access, a user with sudo configured, and a stable internet connection. There are various ways to install Docker on Arch Linux.

Install the docker package or, for the development version, the docker-git AUR package. Next, start and enable docker.service and verify operation:

Note that starting the docker service may fail if you have an active VPN connection due to IP conflicts between the VPN and Docker's bridge and overlay networks. If this is the case, try disconnecting the VPN before starting the docker service. You may reconnect the VPN immediately afterwards. You can also try to deconflict the networks (see solutions [1] or [2]).

Next, verify that you can run containers. The following command downloads the latest Arch Linux image and uses it to run a Hello World program within a container:

If you want to be able to run the docker CLI command as a non-root user, add your user to the docker group, log out and back in, and restart docker.service.

Anyone added to the docker group is root-equivalent, because they can use the docker run --privileged command to start containers with root privileges. For more information see [3] and [4].

Usage

Docker consists of multiple parts:

- The Docker daemon (sometimes also called the Docker Engine), which is a process which runs as docker.service. It serves the Docker API and manages Docker containers.

- The docker CLI command, which allows users to interact with the Docker API via the command line and control the Docker daemon.

- Docker containers, which are namespaced processes that are started and managed by the Docker daemon as requested through the Docker API.

Typically, users interact with Docker by running docker CLI commands; each command asks the Docker daemon to perform an action, and the daemon in turn manages the Docker containers. Understanding the relationship between the client (docker), the server (docker.service) and the containers is important for successfully administering Docker.

Note that if the Docker daemon stops or restarts, all currently running Docker containers are also stopped or restarted.

Also note that it is possible to send requests to the Docker API and control the Docker daemon without the use of the docker CLI command. See the Docker API developer documentation for more information.

See the Docker Getting Started guide for more usage documentation.

Configuration

The Docker daemon can be configured either through a configuration file at /etc/docker/daemon.json or by adding command line flags to the docker.service systemd unit. According to the official Docker documentation, the configuration file approach is preferred. If you wish to use the command line flags instead, use systemd drop-in files to override the ExecStart directive in docker.service.

For more information about options in daemon.json, see the dockerd documentation.

Storage driver

The storage driver controls how images and containers are stored and managed on your Docker host. The default overlay2 driver has good performance and is a good choice for all modern Linux kernels and filesystems. There are a few legacy drivers such as devicemapper and aufs which were intended for compatibility with older Linux kernels, but these have no advantages over overlay2 on Arch Linux.

Users of btrfs or ZFS may use the btrfs or zfs drivers, each of which takes advantage of the unique features of these filesystems. See the btrfs driver and zfs driver documentation for more information and step-by-step instructions.

Daemon socket

By default, the Docker daemon serves the Docker API using a Unix socket at /var/run/docker.sock. This is an appropriate option for most use cases.

It is possible to configure the daemon to additionally listen on a TCP socket, which can allow remote Docker API access from other computers. This can be useful for allowing docker commands on a host machine to access the Docker daemon on a Linux virtual machine, such as an Arch virtual machine on a Windows or macOS system.

Note that the default docker.service file sets the -H flag, and Docker will not start if an option is present both in the flags and in the /etc/docker/daemon.json file. Therefore, the simplest way to change the socket settings is with a drop-in file, such as the following, which adds a TCP socket on port 4243:
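
A minimal sketch of such a drop-in, assuming the stock dockerd path /usr/bin/dockerd; the helper name docker_tcp_dropin is hypothetical and only prints the file so you can review it first. The empty ExecStart= line clears the command inherited from docker.service before the new one is set. Keep in mind that an unauthenticated TCP socket gives anyone who can reach the port root-equivalent control of the host, so only expose it on a trusted network or protect it with TLS.

```shell
# Print a systemd drop-in that makes dockerd serve the Docker API on the
# default Unix socket and on a TCP port (4243 unless another is given).
# docker_tcp_dropin is a hypothetical helper; /usr/bin/dockerd is assumed.
docker_tcp_dropin() {
  local port="${1:-4243}"
  cat <<EOF
[Service]
ExecStart=
ExecStart=/usr/bin/dockerd -H unix:///var/run/docker.sock -H tcp://0.0.0.0:${port}
EOF
}
```

For example, after sudo mkdir -p /etc/systemd/system/docker.service.d, running docker_tcp_dropin | sudo tee /etc/systemd/system/docker.service.d/execstart.conf writes the file; the name execstart.conf is arbitrary as long as it ends in .conf.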

Reload the systemd daemon and restart docker.service to apply changes.

HTTP Proxies

There are two parts to configuring Docker to use an HTTP proxy: configuring the Docker daemon and configuring Docker containers.

Docker daemon proxy configuration

See the Docker documentation on configuring a systemd drop-in unit to configure HTTP proxies.
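
As a sketch of what such a drop-in typically contains: the daemon reads the standard proxy variables from its environment. The proxy URL passed in is a placeholder, and docker_proxy_dropin is a hypothetical helper that assumes a single proxy handles both HTTP and HTTPS.

```shell
# Print a systemd drop-in that sets proxy variables for dockerd.
# $1: proxy URL (placeholder); $2: comma-separated hosts that bypass it.
docker_proxy_dropin() {
  local proxy="$1" no_proxy="${2:-localhost,127.0.0.1}"
  cat <<EOF
[Service]
Environment="HTTP_PROXY=${proxy}"
Environment="HTTPS_PROXY=${proxy}"
Environment="NO_PROXY=${no_proxy}"
EOF
}
```

The output could be saved as, for example, /etc/systemd/system/docker.service.d/http-proxy.conf, followed by reloading the systemd daemon and restarting docker.service.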

Docker container proxy configuration

See the Docker documentation on configuring proxies for information on how to automatically configure proxies for all containers created using the docker CLI.
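
On the client side, the docker CLI reads a proxies section from ~/.docker/config.json and passes those values into new containers as environment variables. A minimal sketch follows; the helper name docker_client_proxy_config and the proxy URL are placeholders. If config.json already exists (it may hold registry credentials), merge the proxies key into it instead of overwriting the file.

```shell
# Print a ~/.docker/config.json fragment with default proxy settings that
# the docker CLI injects into containers it creates.
docker_client_proxy_config() {
  local proxy="$1" no_proxy="${2:-localhost,127.0.0.1}"
  cat <<EOF
{
  "proxies": {
    "default": {
      "httpProxy": "${proxy}",
      "httpsProxy": "${proxy}",
      "noProxy": "${no_proxy}"
    }
  }
}
EOF
}
```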

Configuring DNS

See Docker's DNS documentation for the documented behavior of DNS within Docker containers and information on customizing DNS configuration. In most cases, the resolvers configured on the host are also configured in the container.

Most DNS resolvers hosted on 127.0.0.0/8 are not supported due to conflicts between the container and host network namespaces. Such resolvers are removed from the container's /etc/resolv.conf. If this would result in an empty /etc/resolv.conf, Google DNS is used instead.

Additionally, a special case is handled if 127.0.0.53 is the only configured nameserver. In this case, Docker assumes the resolver is systemd-resolved and uses the upstream DNS resolvers from /run/systemd/resolve/resolv.conf.

If you are using a service such as dnsmasq to provide a local resolver, consider adding a virtual interface with a link-local IP address in the 169.254.0.0/16 block for dnsmasq to bind to instead of 127.0.0.1 to avoid the network namespace conflict.

Images location

By default, docker images are located at /var/lib/docker. They can be moved to other partitions, e.g. if you wish to use a dedicated partition or disk for your images. In this example, we will move the images to /mnt/docker.

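First, stop docker.service, which will also stop all currently running containers and unmount any running images. You may then copy the images from /var/lib/docker to the target destination, e.g. cp -a /var/lib/docker/. /mnt/docker (-a preserves ownership, permissions and symlinks, and the trailing /. copies the directory's contents instead of nesting a docker directory inside /mnt/docker).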

Configure data-root in /etc/docker/daemon.json:
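
A minimal sketch, assuming /mnt/docker as in the example above; daemon_json_data_root is a hypothetical helper that only prints the JSON. Since daemon.json holds a single JSON object, add the data-root key alongside any settings already in the file rather than replacing it.

```shell
# Print a minimal daemon.json that moves Docker's data directory.
daemon_json_data_root() {
  local root="${1:-/mnt/docker}"
  cat <<EOF
{
  "data-root": "${root}"
}
EOF
}
```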

Restart docker.service to apply changes.

Insecure registries

If you decide to use a self-signed certificate for your private registries, Docker will refuse to use it until you declare that you trust it. For example, to allow images from a registry hosted at myregistry.example.com:8443, configure insecure-registries in the /etc/docker/daemon.json file:
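
A sketch of the resulting file, using the example registry above; daemon_json_insecure_registries is a hypothetical helper that accepts one or more host:port arguments and joins them into the JSON array that the insecure-registries key takes.

```shell
# Print a minimal daemon.json listing registries Docker should accept even
# though their certificates are not signed by a trusted CA.
daemon_json_insecure_registries() {
  local out="" r
  for r in "$@"; do
    # Prepend a ", " separator to every entry after the first.
    out="${out}${out:+, }\"${r}\""
  done
  printf '{\n  "insecure-registries": [%s]\n}\n' "$out"
}
```

For example, daemon_json_insecure_registries myregistry.example.com:8443 prints a three-line JSON object whose middle line lists that single registry.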

Restart docker.service to apply changes.

IPv6

In order to enable IPv6 support in Docker, you will need to do a few things. See [5] and [6] for details.

Firstly, enable the ipv6 setting in /etc/docker/daemon.json and set a specific IPv6 subnet. In this case, we will use the private fd00::/80 subnet. Make sure the subnet's prefix length is /80 or shorter: this leaves at least 48 bits for the host part, so a container's IPv6 address can end with the container's 48-bit MAC address, which mitigates NDP neighbor cache invalidation issues.
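
A minimal sketch of the daemon.json settings, assuming the fd00::/80 subnet from above; daemon_json_ipv6 is a hypothetical helper that also rejects prefixes longer than /80, since those leave fewer than the 48 host bits (128 - 80 = 48) needed to hold a MAC address.

```shell
# Print a daemon.json enabling IPv6 with a fixed container subnet.
# Fails if the prefix is longer than /80 (too few bits for a 48-bit MAC).
daemon_json_ipv6() {
  local subnet="${1:-fd00::/80}"
  local prefix="${subnet##*/}"
  if [ "$prefix" -gt 80 ]; then
    echo "prefix /$prefix is longer than /80" >&2
    return 1
  fi
  cat <<EOF
{
  "ipv6": true,
  "fixed-cidr-v6": "${subnet}"
}
EOF
}
```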

Restart docker.service to apply changes.

Finally, to let containers access the host network, you need to resolve the routing issues that arise from using a private IPv6 subnet. Add an IPv6 NAT rule so that container traffic is actually routed:
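
Such a rule is typically a masquerade rule on the POSTROUTING chain of the nat table. A minimal sketch, assuming the fd00::/80 subnet and Docker's default docker0 bridge; add_docker_ipv6_nat is a hypothetical helper, and setting IP6TABLES=echo prints the command instead of running it. Rules added this way are lost on reboot unless you save them, for example to /etc/iptables/ip6tables.rules for ip6tables.service.

```shell
# Masquerade IPv6 traffic from the container subnet that leaves through any
# interface other than docker0. Needs root unless IP6TABLES=echo is set.
add_docker_ipv6_nat() {
  local subnet="${1:-fd00::/80}"
  ${IP6TABLES:-ip6tables} -t nat -A POSTROUTING -s "$subnet" ! -o docker0 -j MASQUERADE
}
```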

Now Docker should be properly IPv6-enabled. To test it, you can run:

If you use firewalld, you can add the rule like this:

If you use ufw, you first need to enable IPv6 forwarding following Uncomplicated Firewall#Forward policy. Next, edit /etc/default/ufw and uncomment the following lines:

Then you can add the iptables rule:

Note that for Docker containers created with docker-compose, you may need to set enable_ipv6: true in the networks section for the corresponding network. You may also need to configure the IPv6 subnet. See [7] for details.

User namespace isolation

By default, processes in Docker containers run within the same user namespace as the main dockerd daemon, i.e. containers are not isolated by the user_namespaces(7) feature. This allows the process within the container to access configured resources on the host according to Users and groups#Permissions and ownership. This maximizes compatibility, but poses a security risk if a container privilege escalation or breakout vulnerability is discovered that allows the container to access unintended resources on the host. (One such vulnerability was published and patched in February 2019.)

The impact of such a vulnerability can be reduced by enabling user namespace isolation. This runs each container in a separate user namespace and maps the UIDs and GIDs inside that user namespace to a different (typically unprivileged) UID/GID range on the host. Note that in the Docker implementation, user namespaces for all containers are mapped to the same UID/GID range on the host, otherwise sharing volumes between multiple containers would not be possible.

Note:

- The main dockerd daemon still runs as root on the host. Running Docker in rootless mode is a different feature.

- Processes in the container are started as the user defined in the USER directive in the Dockerfile used to build the image of the container.

- Enabling user namespace isolation has several limitations. Also, Kubernetes currently does not work with this feature.

- Enabling user namespace isolation effectively masks existing image and container layers, as well as other Docker objects in /var/lib/docker/, because Docker needs to adjust the ownership of these resources. The upstream documentation recommends enabling this feature on a new Docker installation rather than an existing one.

Configure userns-remap in /etc/docker/daemon.json. default is a special value that will automatically create a user and group named dockremap for use with remapping.

Configure /etc/subuid and /etc/subgid with a username/group name, starting UID/GID and UID/GID range size to allocate to the remap user and group. This example allocates a range of 65536 UIDs and GIDs starting at 165536 to the dockremap user and group.
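
A minimal sketch of both files' contents; subid_entry and daemon_json_userns are hypothetical helpers. With the defaults, container UIDs 0 through 65535 map to host UIDs 165536 through 231071 (165536 + 65536 - 1).

```shell
# Print an /etc/subuid or /etc/subgid line: name:first_id:count.
subid_entry() {
  printf '%s:%s:%s\n' "${1:-dockremap}" "${2:-165536}" "${3:-65536}"
}

# Print a minimal daemon.json that turns on user namespace remapping;
# "default" makes Docker create and use the dockremap user and group.
daemon_json_userns() {
  printf '{\n  "userns-remap": "default"\n}\n'
}
```

For example, subid_entry | sudo tee -a /etc/subuid /etc/subgid appends the same range to both files.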

Restart docker.service to apply changes.

After applying this change, all containers will run in an isolated user namespace by default. The remapping may be partially disabled on specific containers by passing the --userns=host flag to the docker command. See [8] for details.

Docker rootless

Install the docker-rootless-extras-bin AUR package to run docker in rootless mode (that is, as a regular user instead of as root).

Configure /etc/subuid and /etc/subgid with a username/group name, starting UID/GID and UID/GID range size to allocate to the remap user and group.

Enable the socket (this will result in docker being started using systemd's socket activation):

Finally, set the DOCKER_HOST environment variable to point at the rootless daemon's socket:
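
A minimal sketch, assuming the rootless daemon's socket is docker.sock inside $XDG_RUNTIME_DIR (normally /run/user/<uid>); rootless_docker_host is a hypothetical helper. The socket itself is enabled with systemctl --user enable --now docker.socket.

```shell
# Print the DOCKER_HOST value pointing the docker CLI at the rootless
# daemon's socket; falls back to /run/user/<uid> if XDG_RUNTIME_DIR is unset.
rootless_docker_host() {
  printf 'unix://%s/docker.sock\n' "${XDG_RUNTIME_DIR:-/run/user/$(id -u)}"
}
```

Adding export DOCKER_HOST="$(rootless_docker_host)" (or the literal unix://$XDG_RUNTIME_DIR/docker.sock) to your shell startup file makes the setting persistent.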

Images

Arch Linux

The following command pulls the archlinux x86_64 image. This is a stripped-down version of Arch core without network, etc.

See also README.md.

For a full Arch base, clone the repo from above and build your own image.

Make sure that the devtools, fakechroot and fakeroot packages are installed.

To build the base image:

Alpine Linux

Alpine Linux is a popular choice for small container images, especially for software compiled as static binaries. The following command pulls the latest Alpine Linux image:

Alpine Linux uses the musl libc implementation instead of the glibc libc implementation used by most Linux distributions. Because Arch Linux uses glibc, there are a number of functional differences between an Arch Linux host and an Alpine Linux container that can impact the performance and correctness of software. A list of these differences is documented here.

Note that dynamically linked software built on Arch Linux (or any other system using glibc) may have bugs and performance problems when run on Alpine Linux (or any other system using a different libc). See [9], [10] and [11] for examples.

CentOS

The following command pulls the latest centos image:

See the Docker Hub page for a full list of available tags for each CentOS release.

Debian

The following command pulls the latest debian image:

See the Docker Hub page for a full list of available tags, including both standard and slim versions for each Debian release.

Distroless

Google maintains distroless images for several popular programming languages such as Java, Python, Go, Node.js, .NET Core and Rust. These images contain only the programming language runtime without any OS-related files, resulting in very small images for packaging software.

See the GitHub README for a list of images and instructions on their use.

Run GPU accelerated Docker containers with NVIDIA GPUs

With NVIDIA Container Toolkit (recommended)

Starting from Docker version 19.03, NVIDIA GPUs are natively supported as Docker devices. NVIDIA Container Toolkit is the recommended way of running containers that leverage NVIDIA GPUs.

Install the nvidia-container-toolkit AUR package. Next, restart docker. You can now run containers that make use of NVIDIA GPUs using the --gpus option:

Specify how many GPUs are enabled inside a container:

Specify which GPUs to use:

or

Specify a capability (graphics, compute, ...) for the container (though this is rarely if ever used this way):

For more information see README.md and Wiki.

With NVIDIA Container Runtime

Install the nvidia-container-runtime AUR package. Next, register the NVIDIA runtime by editing /etc/docker/daemon.json and then restart docker.
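
A sketch of the runtime registration, assuming the package installs its binary as /usr/bin/nvidia-container-runtime; daemon_json_nvidia_runtime is a hypothetical helper, and the runtimes key should be merged into any existing daemon.json rather than replacing it.

```shell
# Print a daemon.json fragment registering a runtime named "nvidia".
# $1 overrides the assumed path of the runtime binary.
daemon_json_nvidia_runtime() {
  local path="${1:-/usr/bin/nvidia-container-runtime}"
  cat <<EOF
{
  "runtimes": {
    "nvidia": {
      "path": "${path}",
      "runtimeArgs": []
    }
  }
}
EOF
}
```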

The runtime can also be registered via a command line option to dockerd:

Afterwards GPU accelerated containers can be started with

or (requires Docker version 19.03 or higher)

See also README.md.

With nvidia-docker (deprecated)

nvidia-docker is a wrapper around NVIDIA Container Runtime which registers the NVIDIA runtime by default and provides the nvidia-docker command.

To use nvidia-docker, install the nvidia-docker AUR package and then restart docker. Containers with NVIDIA GPU support can then be run using any of the following methods:

or (requires Docker version 19.03 or higher)

Arch Linux image with CUDA

You can use the following Dockerfile to build a custom Arch Linux image with CUDA. It uses the Dockerfile frontend syntax 1.2 to cache pacman packages on the host. The DOCKER_BUILDKIT=1 environment variable must be set on the client before building the Docker image.

Useful tips

To grab the IP address of a running container:

For each running container, the name and corresponding IP address can be listed for use in /etc/hosts:

Remove Docker and images

To remove Docker entirely, follow the steps below:

Check for running containers:

List all containers running on the host for deletion:

Stop a running container:

Kill containers that are still running:

Delete containers listed by ID:

List all Docker images:

Delete images by ID:

Delete all images, containers, volumes, and networks that are not associated with a container (dangling):

To additionally remove all unused images (not just dangling ones), add the -a flag to the command:

Delete all Docker data (purge directory):

Troubleshooting

docker0 Bridge gets no IP / no internet access in containers when using systemd-networkd

Docker attempts to enable IP forwarding globally, but by default systemd-networkd overrides the global sysctl setting for each defined network profile. Set IPForward=yes in the network profile. See Internet sharing#Enable packet forwarding for details.

When systemd-networkd tries to manage the network interfaces created by Docker, this can lead to connectivity issues. Try disabling management of those interfaces; networkctl list should then report unmanaged in the SETUP column for all networks created by Docker.
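
One way to do this, sketched under the assumption that Docker's interfaces keep their default names (docker0, br-* for user-defined networks and veth* for container links), is a .network file that matches them and marks them unmanaged. docker_unmanaged_network is a hypothetical helper; the output could be saved as, for example, /etc/systemd/network/10-docker-unmanaged.network. networkd applies the first matching file in lexical order, so the name should sort before any catch-all .network file.

```shell
# Print a systemd-networkd .network file telling networkd to leave
# Docker-created interfaces alone. Name= accepts space-separated globs.
docker_unmanaged_network() {
  cat <<'EOF'
[Match]
Name=docker0 br-* veth*

[Link]
Unmanaged=yes
EOF
}
```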

- You may need to restart docker.service each time you restart systemd-networkd.service or iptables.service.

- Also be aware that nftables may block docker connections by default. Use nft list ruleset to check for blocking rules. nft flush chain inet filter forward removes all forwarding rules temporarily. Edit /etc/nftables.conf to make changes permanent. Remember to restart nftables.service to reload rules from the config file. See [12] for details about nftables support in Docker.

Default number of allowed processes/threads too low

If you run into error messages like

then you might need to adjust the number of processes allowed by systemd. The default is 500 (see system.conf), which is pretty small for running several docker containers. Edit docker.service with the following snippet:
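
A minimal sketch of that snippet, assuming you want to lift the limit entirely; docker_tasksmax_dropin is a hypothetical helper, and a finite number can be passed instead of infinity. The output can be pasted into sudo systemctl edit docker.service, after which docker.service should be restarted.

```shell
# Print a drop-in raising the task (process/thread) limit for docker.service.
docker_tasksmax_dropin() {
  printf '[Service]\nTasksMax=%s\n' "${1:-infinity}"
}
```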

Error initializing graphdriver: devmapper

If systemctl fails to start docker and provides an error:

Then, try the following steps to resolve the error. Stop the service, back up /var/lib/docker/ (if desired), remove the contents of /var/lib/docker/, and try to start the service. See the open GitHub issue for details.

Failed to create some/path/to/file: No space left on device

If you are getting an error message like this:

when building or running a Docker image, even though you do have enough disk space available, make sure:

- Tmpfs is disabled or has enough memory allocation. Docker might be trying to write files into /tmp but fails due to restrictions in memory usage and not disk space.

- If you are using XFS, you might want to remove the noquota mount option from the relevant entries in /etc/fstab (usually where /tmp and/or /var/lib/docker reside). Refer to Disk quota for more information, especially if you plan on using and resizing the overlay2 Docker storage driver.

- XFS quota mount options (uquota, gquota, prjquota, etc.) fail during re-mount of the file system. To enable quota for the root file system, the mount option must be passed to initramfs as a kernel parameter rootflags=. Subsequently, it should not be listed among mount options in /etc/fstab for the root (/) filesystem.

Docker-machine fails to create virtual machines using the virtualbox driver

If docker-machine fails to create VMs using the virtualbox driver with the following error:

Reload VirtualBox from the command line with vboxreload.

Starting Docker breaks KVM bridged networking

This is a known issue. You can use the following workaround:

If there is already a network bridge configured for KVM, this may be fixable by telling docker about it. See [14], where the docker configuration is modified as follows:

Be sure to replace existing_bridge_name with the actual name of your network bridge.

Image pulls from Docker Hub are rate limited

Beginning on November 1, 2020, rate limiting is enabled for downloads from Docker Hub by anonymous and free accounts. See the rate limit documentation for more information.

Unauthenticated rate limits are tracked by source IP. Authenticated rate limits are tracked by account.

If you need to exceed the rate limits, you can either sign up for a paid plan or mirror the images you need to a different image registry. You can host your own registry or use a cloud hosted registry such as Amazon ECR, Google Container Registry, Azure Container Registry or Quay Container Registry.

To mirror an image, use the pull, tag and push subcommands of the Docker CLI. For example, to mirror the 1.19.3 tag of the Nginx image to a registry hosted at cr.example.com:
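
The three steps can be sketched as a small function; mirror_image is a hypothetical helper, cr.example.com is the example registry above, and setting DOCKER=echo prints the commands instead of running them. Pushing assumes you have already logged in to the target registry with docker login.

```shell
# Mirror an image: pull it from Docker Hub, retag it under the mirror's
# hostname, and push it there. Stops at the first failing step.
mirror_image() {
  local image="$1" registry="$2"
  local docker="${DOCKER:-docker}"
  $docker pull "$image" &&
    $docker tag "$image" "$registry/$image" &&
    $docker push "$registry/$image"
}
```

For example, mirror_image nginx:1.19.3 cr.example.com produces cr.example.com/nginx:1.19.3.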

You can then pull or run the image from the mirror:

See also

- Are Docker containers really secure? — opensource.com

“Build once, deploy anywhere” is really nice on paper, but if you want to use ARM targets to reduce your bill, such as Raspberry Pis and AWS A1 instances, or even keep using your old i386 servers, deploying everywhere can become a tricky problem, as you need to build your software for each of these platforms. To fix this problem, Docker introduced the principle of multi-arch builds, and we’ll see how to use it and put it into production.

Quick setup

To be able to use the docker manifest command, you’ll have to enable the experimental features.

On macOS and Windows, it’s really simple. Open the Preferences > Command Line panel and just enable the experimental features.

On Linux, you’ll have to edit ~/.docker/config.json and restart the engine.
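
A minimal sketch of that setting; docker_cli_experimental_config is a hypothetical helper. Because config.json may already hold registry credentials, merge the key into the existing file rather than overwriting it. Newer Docker CLI releases may no longer need this flag for docker manifest.

```shell
# Print a ~/.docker/config.json fragment enabling experimental CLI features.
docker_cli_experimental_config() {
  printf '{\n  "experimental": "enabled"\n}\n'
}
```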

Under the hood

OK, now we understand why multi-arch images are interesting, but how do we produce them? How do they work?

Each Docker image is represented by a manifest. A manifest is a JSON file containing all the information about a Docker image. This includes references to each of its layers, their corresponding sizes, the hash of the image, its size and also the platform it’s supposed to work on. This manifest can then be referenced by a tag so that it’s easy to find.

For example, if you run the following command, you’ll get the manifest of a non-multi-arch image in the amd64/rust repository with the 1.42-slim-buster tag:

$ docker manifest inspect --verbose amd64/rust:1.42-slim-buster

{
  "Ref": "docker.io/amd64/rust:1.42-slim-buster",
  "Descriptor": {
    "mediaType": "application/vnd.docker.distribution.manifest.v2+json",
    "digest": "sha256:1bf29985958d1436197c3b507e697fbf1ae99489ea69e59972a30654cdce70cb",
    "size": 742,
    "platform": {
      "architecture": "amd64",
      "os": "linux"
    }
  },
  "SchemaV2Manifest": {
    "schemaVersion": 2,
    "mediaType": "application/vnd.docker.distribution.manifest.v2+json",
    "config": {
      "mediaType": "application/vnd.docker.container.image.v1+json",
      "size": 4830,
      "digest": "sha256:dbeae51214f7ff96fb23481776002739cf29b47bce62ca8ebc5191d9ddcd85ae"
    },
    "layers": [
      {
        "mediaType": "application/vnd.docker.image.rootfs.diff.tar.gzip",
        "size": 27091862,
        "digest": "sha256:c499e6d256d6d4a546f1c141e04b5b4951983ba7581e39deaf5cc595289ee70f"
      },
      {
        "mediaType": "application/vnd.docker.image.rootfs.diff.tar.gzip",
        "size": 175987238,
        "digest": "sha256:e2f298701fbeb02568c3dcb9822f8488e24ef12f5430bc2e8562016ba8670f0d"
      }
    ]
  }
}

The question now is: how can we put multiple Docker images, each supporting a different architecture, behind the same tag?

What if this manifest file contained a list of manifests, so that the Docker Engine could pick the one that matches its platform at runtime? That’s exactly how the manifest is built for a multi-arch image. This type of manifest is called a manifest list.

Let’s take a look at a multi-arch image:

$ docker manifest inspect --verbose rust:1.42-slim-buster

[
  {
    "Ref": "docker.io/library/rust:1.42-slim-buster@sha256:1bf29985958d1436197c3b507e697fbf1ae99489ea69e59972a30654cdce70cb",
    "Descriptor": {
      "mediaType": "application/vnd.docker.distribution.manifest.v2+json",
      "digest": "sha256:1bf29985958d1436197c3b507e697fbf1ae99489ea69e59972a30654cdce70cb",
      "size": 742,
      "platform": {
        "architecture": "amd64",
        "os": "linux"
      }
    },
    "SchemaV2Manifest": { ... }
  },
  {
    "Ref": "docker.io/library/rust:1.42-slim-buster@sha256:116d243c6346c44f3d458e650e8cc4e0b66ae0bcd37897e77f06054a5691c570",
    "Descriptor": {
      "mediaType": "application/vnd.docker.distribution.manifest.v2+json",
      "digest": "sha256:116d243c6346c44f3d458e650e8cc4e0b66ae0bcd37897e77f06054a5691c570",
      "size": 742,
      "platform": {
        "architecture": "arm",
        "os": "linux",
        "variant": "v7"
      }
    },
    "SchemaV2Manifest": { ... }
  },
  ...
]

We can see that it’s simply a list of the manifests of all the different images, each with a platform section that the Docker Engine uses to find the image matching its own platform.
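
To see which entry a given host would look for, here is a rough sketch mapping uname -m output to the os/architecture/variant form used in the platform sections above. docker_platform is a hypothetical helper with a partial mapping; it illustrates the idea and is not the Engine's actual matching code.

```shell
# Map a `uname -m` machine name to a Docker platform string.
# Partial, illustrative mapping; returns 1 for unknown machines.
docker_platform() {
  case "${1:-$(uname -m)}" in
    x86_64)        echo linux/amd64 ;;
    aarch64|arm64) echo linux/arm64 ;;
    armv7l)        echo linux/arm/v7 ;;
    armv6l)        echo linux/arm/v6 ;;
    i386|i686)     echo linux/386 ;;
    *)             return 1 ;;
  esac
}
```

Run without arguments, it describes the current host; for example, a Raspberry Pi running a 32-bit ARMv7 kernel would look for the linux/arm/v7 entry.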

How they’re made

There are two ways to use Docker to build a multiarch image: using docker manifest or using docker buildx.

To demonstrate this, we will need a project to play with. We’ll use the following Dockerfile, which simply produces a Debian-based image that includes the curl binary.

ARG ARCH=
FROM ${ARCH}debian:buster-slim

RUN apt-get update \
    && apt-get install -y curl \
    && rm -rf /var/lib/apt/lists/*

ENTRYPOINT [ "curl" ]

Now we are ready to start building our multi-arch image.

The hard way with docker manifest

We’ll start by doing it the hard way with `docker manifest` because it’s the oldest tool made by Docker to build multiarch images.

To begin our journey, we’ll first need to build and push the images for each architecture to the Docker Hub. We will then combine all these images in a manifest list referenced by a tag.

# AMD64
$ docker build -t your-username/multiarch-example:manifest-amd64 --build-arg ARCH=amd64/ .
$ docker push your-username/multiarch-example:manifest-amd64

# ARM32V7
$ docker build -t your-username/multiarch-example:manifest-arm32v7 --build-arg ARCH=arm32v7/ .
$ docker push your-username/multiarch-example:manifest-arm32v7

# ARM64V8
$ docker build -t your-username/multiarch-example:manifest-arm64v8 --build-arg ARCH=arm64v8/ .
$ docker push your-username/multiarch-example:manifest-arm64v8

Now that we have built our images and pushed them, we are able to reference them all in a manifest list using the docker manifest command.

$ docker manifest create \
    your-username/multiarch-example:manifest-latest \
    --amend your-username/multiarch-example:manifest-amd64 \
    --amend your-username/multiarch-example:manifest-arm32v7 \
    --amend your-username/multiarch-example:manifest-arm64v8

Once the manifest list has been created, we can push it to Docker Hub.

$ docker manifest push your-username/multiarch-example:manifest-latest
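
The per-architecture steps above follow one pattern, so they can be sketched as a loop. build_manifest_list is a hypothetical helper, REPO defaults to the placeholder your-username/multiarch-example, and DOCKER=echo prints the commands instead of running them. It assumes the Dockerfile above is in the current directory and that you are logged in to Docker Hub; on an x86_64 host, the ARM RUN steps also need QEMU user-mode emulation registered through binfmt_misc.

```shell
# Build and push one image per architecture, then combine them into a
# manifest list. Set DOCKER=echo for a dry run; REPO overrides the
# placeholder repository name.
build_manifest_list() {
  local repo="${REPO:-your-username/multiarch-example}"
  local docker="${DOCKER:-docker}"
  local arch amends=""
  for arch in amd64 arm32v7 arm64v8; do
    $docker build -t "$repo:manifest-$arch" --build-arg "ARCH=$arch/" . || return 1
    $docker push "$repo:manifest-$arch" || return 1
    amends="$amends --amend $repo:manifest-$arch"
  done
  # $amends is left unquoted on purpose so it splits into separate arguments.
  $docker manifest create "$repo:manifest-latest" $amends || return 1
  $docker manifest push "$repo:manifest-latest"
}
```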

If you now go to Docker Hub, you’ll be able to see the new tag referencing the images.

The simple way with docker buildx

You should be aware that buildx is still experimental.

If you are on Mac or Windows, you have nothing to worry about: buildx is shipped with Docker Desktop. If you are on Linux, you might need to install it by following the documentation at https://github.com/docker/buildx.

The magic of buildx is that the whole process above can be done with a single command.

$ docker buildx build \
    --push \
    --platform linux/arm/v7,linux/arm64/v8,linux/amd64 \
    --tag your-username/multiarch-example:buildx-latest .

And that’s it, one command, one tag and multiple images.

Let’s go to production

We’ll now move to CI and use GitHub Actions to build a multi-arch image and push it to Docker Hub.

To do so, we’ll write a configuration file that we’ll put in .github/workflows/image.yml of our git repository.

name: build our image

on:
  push:
    branches: master

jobs:
  build:
    runs-on: ubuntu-latest
    steps:
      - name: checkout code
        uses: actions/checkout@v2
      - name: install buildx
        id: buildx
        uses: crazy-max/ghaction-docker-buildx@v1
        with:
          version: latest
      - name: build the image
        run: |
          docker buildx build \
            --tag your-username/multiarch-example:latest \
            --platform linux/amd64,linux/arm/v7,linux/arm64 .

Thanks to the GitHub Action crazy-max/ghaction-docker-buildx, we can install and configure buildx in a single step.

To be able to push, we now have to get an access token on Docker Hub in the security settings.

Once you have created it, you’ll have to set it in your repository settings in the Secrets section. We’ll create DOCKER_USERNAME and DOCKER_PASSWORD secrets to log in afterward.

Now we can update the GitHub Action configuration file and add a login step before the build. Then we can add the --push flag to the buildx command.

...
      - name: login to docker hub
        run: echo '${{ secrets.DOCKER_PASSWORD }}' | docker login -u '${{ secrets.DOCKER_USERNAME }}' --password-stdin
      - name: build the image
        run: |
          docker buildx build --push \
            --tag your-username/multiarch-example:latest \
            --platform linux/amd64,linux/arm/v7,linux/arm64 .

Our image is now built and pushed each time something is pushed to master.

Conclusion

This post gave an example of how to build a multi-arch Docker image and push it to Docker Hub. It also showed how to automate this process for git repositories using GitHub Actions, but this can be done from any other CI system too.

Examples of building a multi-arch image on CircleCI, GitLab CI and Travis CI can be found here.